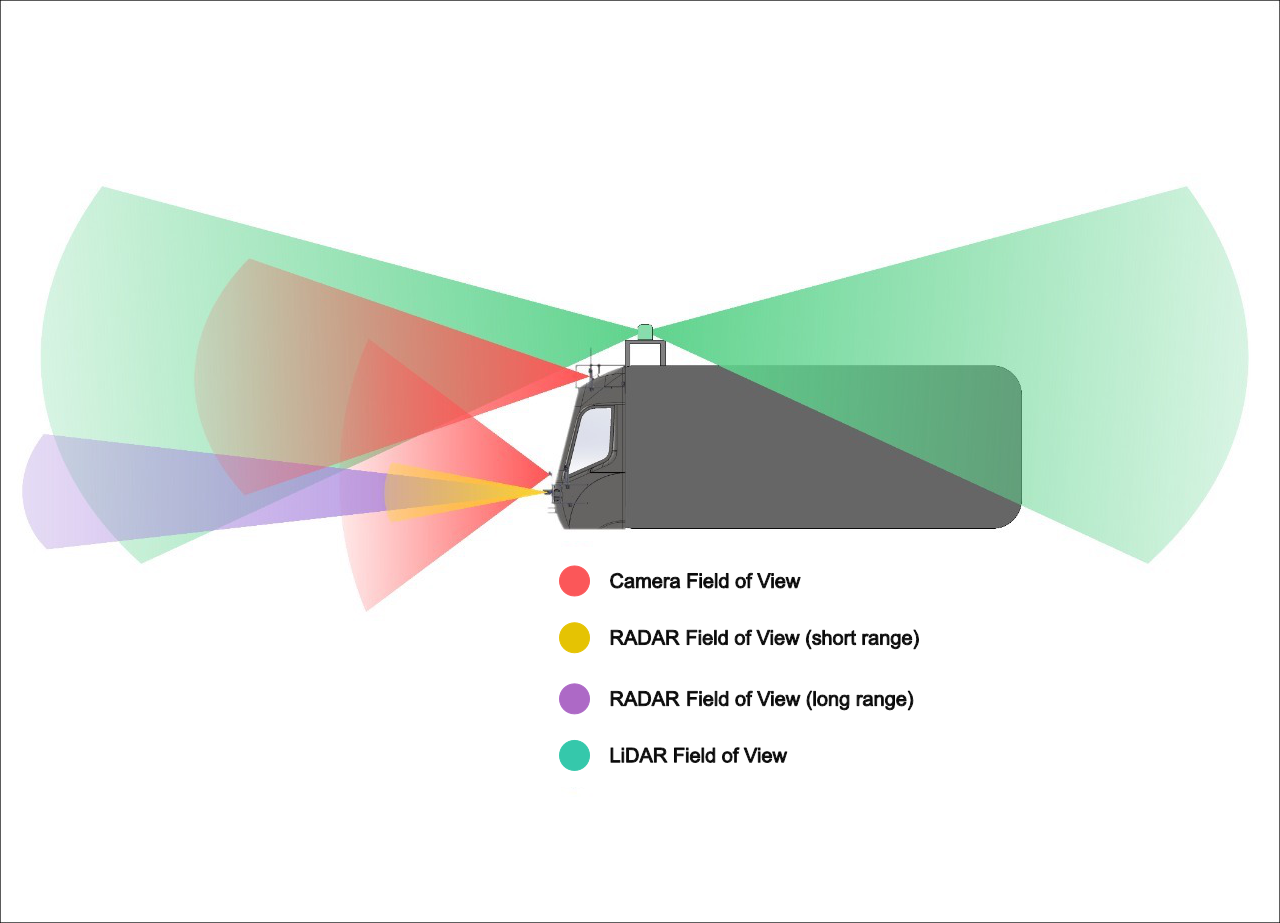

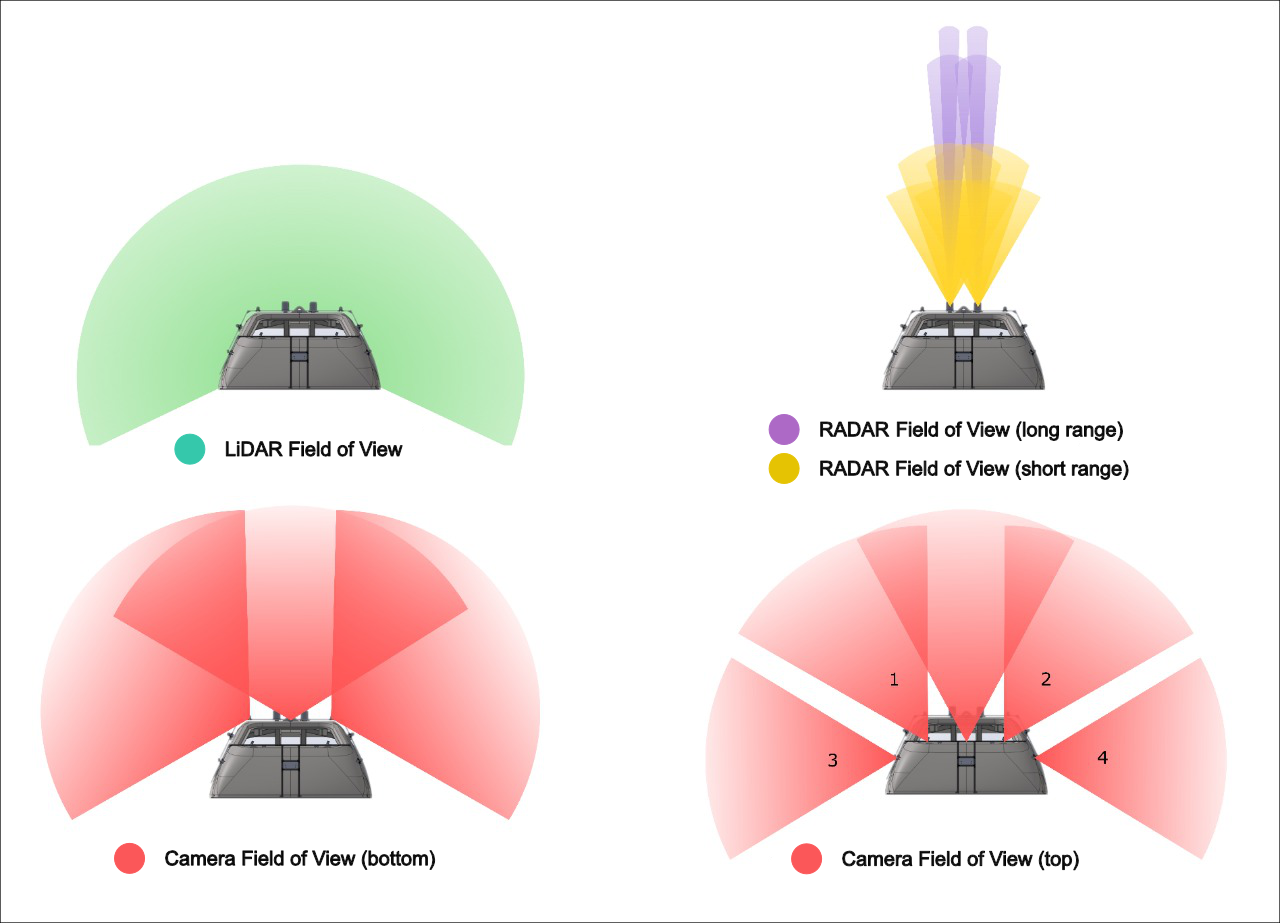

The autonomous tram uses a suite of sensors to navigate safely in mixed traffic. Eight external cameras monitor the surroundings, and a multidirectional lidar builds 3D maps for spatial awareness. Radar units measure the speed and distance of objects, even in poor weather, and a GNSS-RTK receiver provides precise location data. An IMU tracks the tram's motion, supporting stable navigation, and an in-cabin camera monitors the driver's alertness. Together, these sensors enable real-time decision-making, collision avoidance, and autonomous operation in dynamic urban environments.

The tram's AI system is a pipeline of three stages: vision, positioning, and decision-making. The vision system uses deep learning to process camera, lidar, and radar inputs, recognizing objects, detecting obstacles, and interpreting traffic conditions. The high-precision positioning system combines GNSS-RTK and IMU data to localize the tram in real time. The decision system uses machine learning algorithms to analyze environmental data, plan maneuvers, and manage speed, making split-second adjustments. Together, these components enable safe, efficient navigation in mixed-traffic environments.
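The three-stage flow described above can be sketched in a few lines. This is only an illustrative skeleton, not the project's implementation: the names `Detection`, `Pose`, `perceive`, `localize`, `decide`, and `run_pipeline`, as well as the headway and margin values, are hypothetical stand-ins for the real deep-learning and sensor-fusion components.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # e.g. "pedestrian" or "car"
    distance_m: float  # distance along the track; negative means behind the tram


@dataclass
class Pose:
    track_position_m: float  # position along the route
    speed_mps: float         # current tram speed


def perceive(raw_detections):
    """Vision stage stand-in: keep only objects ahead of the tram."""
    return [d for d in raw_detections if d.distance_m > 0]


def localize(gnss_position_m, imu_speed_mps):
    """Positioning stage stand-in: package GNSS-RTK and IMU readings as a pose."""
    return Pose(gnss_position_m, imu_speed_mps)


def decide(pose, detections, headway_s=3.0, margin_m=5.0):
    """Decision stage stand-in: brake if an object is inside a speed-dependent gap."""
    safe_gap_m = pose.speed_mps * headway_s + margin_m  # assumed rule of thumb
    nearest_m = min((d.distance_m for d in detections), default=float("inf"))
    return "brake" if nearest_m < safe_gap_m else "cruise"


def run_pipeline(raw_detections, gnss_position_m, imu_speed_mps):
    """Chain the three stages: vision, then positioning, then decision."""
    detections = perceive(raw_detections)
    pose = localize(gnss_position_m, imu_speed_mps)
    return decide(pose, detections)
```

For example, `run_pipeline([Detection("pedestrian", 12.0)], 100.0, 8.0)` returns `"brake"` under these assumed thresholds, because 12 m is inside the 29 m gap computed for a speed of 8 m/s.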

| Research Focus | Description |
|---|---|
| Traffic Sign Recognition | Detects and interprets traffic signs, enabling the tram to follow road rules in real time. |
| Rail Sign Recognition | Recognizes rail-specific signs to ensure safe operation on tram routes. |
| Landscape Segmentation | Differentiates road, rail, and surrounding areas for accurate environmental understanding. |
| Camera-based Object Detection | Identifies objects using visual data, essential for obstacle detection and path planning. |
| Lidar-based Object Detection | Maps 3D structures, detecting objects with high precision, even in low-light conditions. |
| Camera-Radar Fusion Detection | Combines camera and radar data to improve object detection accuracy in various environments. |
| Camera-Lidar Object Detection | Merges camera and lidar data for enhanced depth perception and object recognition accuracy. |
| 3D Space Object Tracking | Tracks moving objects in 3D space, predicting their paths to avoid collisions. |
| Distance and Velocity Estimation | Measures distances and speeds of nearby objects, aiding in safe maneuver planning. |
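To make the tracking and distance-and-velocity rows more concrete, the sketch below applies an alpha-beta filter, a simple fixed-gain tracker, to a sequence of range measurements of one object. The source does not say which estimator the tram uses, so this is a swapped-in textbook technique reduced to one dimension; the function name `alpha_beta_track` and the gain values are assumptions.

```python
def alpha_beta_track(ranges_m, dt_s, alpha=0.5, beta=0.3):
    """Estimate an object's distance and relative velocity from range samples.

    ranges_m: measured distances to the object, one per time step (metres)
    dt_s:     time between samples (seconds)
    alpha, beta: fixed filter gains (illustrative values, not tuned for a tram)
    Returns the final (distance_m, velocity_mps) estimate.
    """
    distance = ranges_m[0]  # start from the first measurement
    velocity = 0.0          # no velocity information yet
    for measured in ranges_m[1:]:
        # Predict where the object should be under constant velocity.
        predicted = distance + velocity * dt_s
        residual = measured - predicted
        # Correct both states by a fixed fraction of the prediction error.
        distance = predicted + alpha * residual
        velocity = velocity + (beta / dt_s) * residual
    return distance, velocity
```

Fed with ranges that shrink steadily, the velocity estimate settles on a negative value, meaning the object is approaching. A production tracker would work in 3D and typically use a Kalman-type filter with measurement noise models.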

| Research Focus | Description |
|---|---|
| Real-Time Positioning | Continuously updates the tram's exact location using GNSS-RTK and IMU data, crucial for precise navigation. |
| Semantic Mapping | Creates detailed maps with labeled elements (e.g., roads, rails), enabling the tram to understand and navigate its environment effectively. |
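One common way to combine a GNSS-RTK fix with IMU-derived motion is a Kalman filter. The sketch below is a deliberately minimal one-dimensional version that tracks position along the track only; the source does not describe the actual fusion method, and the function name `kalman_step`, the noise values, and the default GNSS variance (roughly 2 cm standard deviation) are assumptions for illustration.

```python
def kalman_step(position_m, variance_m2, velocity_mps, dt_s,
                process_noise_m2, gnss_position_m=None, gnss_variance_m2=0.0004):
    """One predict/update cycle of a scalar Kalman filter.

    Predict: dead-reckon with the IMU-derived velocity and grow the uncertainty.
    Update:  if a GNSS-RTK fix is available, blend it in according to the
             relative uncertainty of the prediction and the fix.
    Returns the new (position_m, variance_m2).
    """
    # Prediction step (IMU dead reckoning).
    position = position_m + velocity_mps * dt_s
    variance = variance_m2 + process_noise_m2

    # Measurement update (skipped when no GNSS fix arrives, e.g. in a tunnel).
    if gnss_position_m is not None:
        gain = variance / (variance + gnss_variance_m2)
        position = position + gain * (gnss_position_m - position)
        variance = (1.0 - gain) * variance

    return position, variance
```

Between fixes the variance grows, so the next GNSS measurement pulls the estimate more strongly; with frequent fixes the filter leans on the IMU to smooth the position. A real system would estimate full 3D pose, typically with an extended Kalman filter.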

| Research Focus | Description |
|---|---|
| Railway Estimator | Predicts the tram’s position on the track using sensor data, aiding in route planning and trajectory adjustments. |
| Trajectory Prediction | Forecasts the tram’s future path based on current speed and direction to optimize maneuvering and collision avoidance. |
| Interacting Multiple Model | Combines multiple models to predict and adapt to different environmental scenarios. |
| Risk Assessment | Evaluates potential hazards by analyzing environmental data, enabling proactive safety measures and decision-making. |
| Path Planning | Determines the optimal route by considering obstacles, track conditions, and tram capabilities for efficient navigation. |
| Adaptive Cruise Control | Automatically adjusts speed to maintain safe distances and optimal speed based on surrounding traffic conditions. |
| Collision Avoidance | Detects and avoids potential collisions by predicting obstacles and executing evasive actions in real time. |
| Emergency Braking System | Automatically engages braking when imminent collision or danger is detected to prevent accidents. |
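The collision-avoidance and emergency-braking rows can be illustrated with a time-to-collision (TTC) check, a widely used risk measure. This is a sketch under stated assumptions rather than the tram's actual logic: the function names and the 2 s and 4 s thresholds are hypothetical, and a real system would also account for braking performance and track conditions.

```python
def time_to_collision(gap_m, closing_speed_mps):
    """Seconds until the gap closes; infinite if the gap is not shrinking."""
    if closing_speed_mps <= 0:
        return float("inf")
    return gap_m / closing_speed_mps


def choose_action(gap_m, tram_speed_mps, object_speed_mps,
                  ttc_brake_s=2.0, ttc_slow_s=4.0):
    """Pick a speed action from the time to collision with the object ahead.

    Speeds are measured along the track in the tram's direction of travel.
    The thresholds are illustrative values, not calibrated ones.
    """
    closing_speed = tram_speed_mps - object_speed_mps
    ttc = time_to_collision(gap_m, closing_speed)
    if ttc < ttc_brake_s:
        return "emergency_brake"
    if ttc < ttc_slow_s:
        return "slow_down"
    return "maintain"
```

With a stationary obstacle 10 m ahead and the tram moving at 10 m/s, the TTC is 1 s and `choose_action(10.0, 10.0, 0.0)` returns `"emergency_brake"`; the same obstacle 100 m ahead yields `"maintain"`.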